A temporary table is one that is intended to be used only for a given session. For example, if you create a normal table, you expect the table to remain persisted on the database until you purposefully remove it. Each time you log in, you expect that the table is available and queryable. This isn’t the case with a temporary table. You create this kind of table using syntax like the following snippet. Notice the hash tag before the table name; this is what identifies the table as temporary.

CREATE TABLE #MODE([MODE_ID] INT NOT NULL,[MODE] NVARCHAR (50) NOT NULL)

Temporary tables are only visible to the session owner. No one else will be able to query the contents of the table. Use this type of table to hold data that is in an intermediate transformation stage.

Sink Table

A sink table is often also referred to as a data sink. Again, the name itself can be used to describe its purpose. Like an ordinary sink, you normally have water running in it, but sometimes you stop the outflow and sometimes you stop the inflow. Instead of water flowing in and out, it is data. Consider a sink table as one that can store data as you copy it into your data lake or data warehouse. It is similar to a temporary table; however, the main difference is that the data is stored in a storage account like ADLS instead of a SQL table.

Create External Table as Select

You have already learned what a CTAS is, and it is very similar to Create External Table as Select (CETAS). Instead of a regular table, which is created with CTAS, an external table can be created using the SELECT statement. You simply need to add the key word EXTERNAL to the SQL command and configure a few specific attributes. The following is an example of a CETAS statement. Note that you can also create an external table without the SELECT statement, just as you would a typical database table.

CREATE EXTERNAL TABLE MeditationWITH( LOCATION = ‘SessionCSV/Meditation/POW’, DATA_SOURCE = Meditation_Source,FILE_FORMAT = BrainwaveCSVFormat)ASSELECT *FROM ‘abfss://<uid>@<accountName>.dfs.core.windows.net’

A CETAS statement is a way to copy data from a file into a table so that you can run SQL queries against the data. While we’re at it, here is another way to copy some data from a file stored in an Azure Storage account container into a table for querying using the COPY INTO SQL statement:

COPY INTO [dbo][.READING]FROM ‘https://<accountName>.blob.core.windows.net/SessionCSV/Meditation/POW’WITH(FILE_TYPE = ‘CSV’, FIELD_TERMINATOR = ‘͵’)

The arguments described in the previous snippet are fully discussed in the next section on external tables.

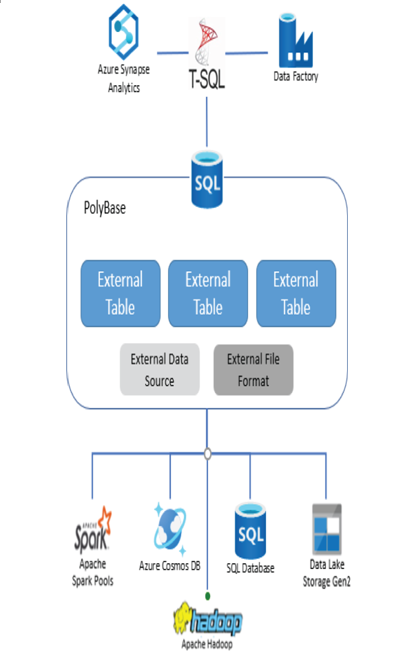

External tables provide the ability to perform SQL queries on sources stored on many different data storage products. For example, from a dedicated SQL pool, you could query data originally stored on Hadoop or ADLS. Querying data from IBM DB2, SAP Hana, Azure Cosmos DB, and Oracle datastores is also possible after the data has been placed into external tables. The feature that facilitates the connectivity between Azure Synapse Analytics and those other data sources is called PolyBase. The SQL syntax later in this section, where you create the external table, the data source, and file format, automatically implements and uses PolyBase. PolyBase is a feature that works behind the scenes without getting much recognition and visibility of the significant capability it provides. An overview of the external table concept is illustrated in Figure 2.12.

Before you can query data from those other sources, you need to set up the external data source, the external file format, and the external table. Keep in mind that this is performed in Azure Synapse Analytics. Consider the following an introduction; hands‐on exercises are offered later in this book. The syntax to create a data source is illustrated with the following syntax:

CREATE EXTERNAL DATA SOURCE Meditation_SourceWITH (LOCATION = ‘https://<accountName>.dfs.core.windows.net/<container>/<path>’,TYPE = HADOOP);

FIGURE 2.12 Azure Synapse Analytics external tables

This SQL command creates a data source named Meditation_Source using data files hosted on an ADLS file share. When TYPE is set to HADOOP, it instructs the platform to use the Java‐based technology to access the files.